Web applications are critical to business operations, and ensuring their availability and performance is essential for providing an optimal user experience. Load balancing is one of the most effective techniques for managing network traffic by distributing user requests across multiple servers to avoid overloading any single one. This process not only improves availability and scalability but also optimizes resource utilization, resulting in a better overall user experience.

Applications, especially those that face variable traffic and load spikes, such as e-commerce platforms during sales campaigns or special events, require efficient load balancing. Without it, a single server could become overwhelmed, leading to slow response times or even service outages. Load balancing distributes incoming traffic in a way that makes the best use of available resources, maximizing performance and ensuring that every user has a seamless experience.

Load Balancing Architectures: Static vs. Dynamic Algorithms

Load balancing algorithms can be classified into two main categories: static and dynamic. Each has its own advantages and is used in different scenarios depending on system requirements and load conditions.

Static Algorithms: Simplicity and Predictability

Static algorithms make decisions based on predefined rules without considering the current state of the servers. While easy to implement, they have limited adaptability to fluctuations in traffic.

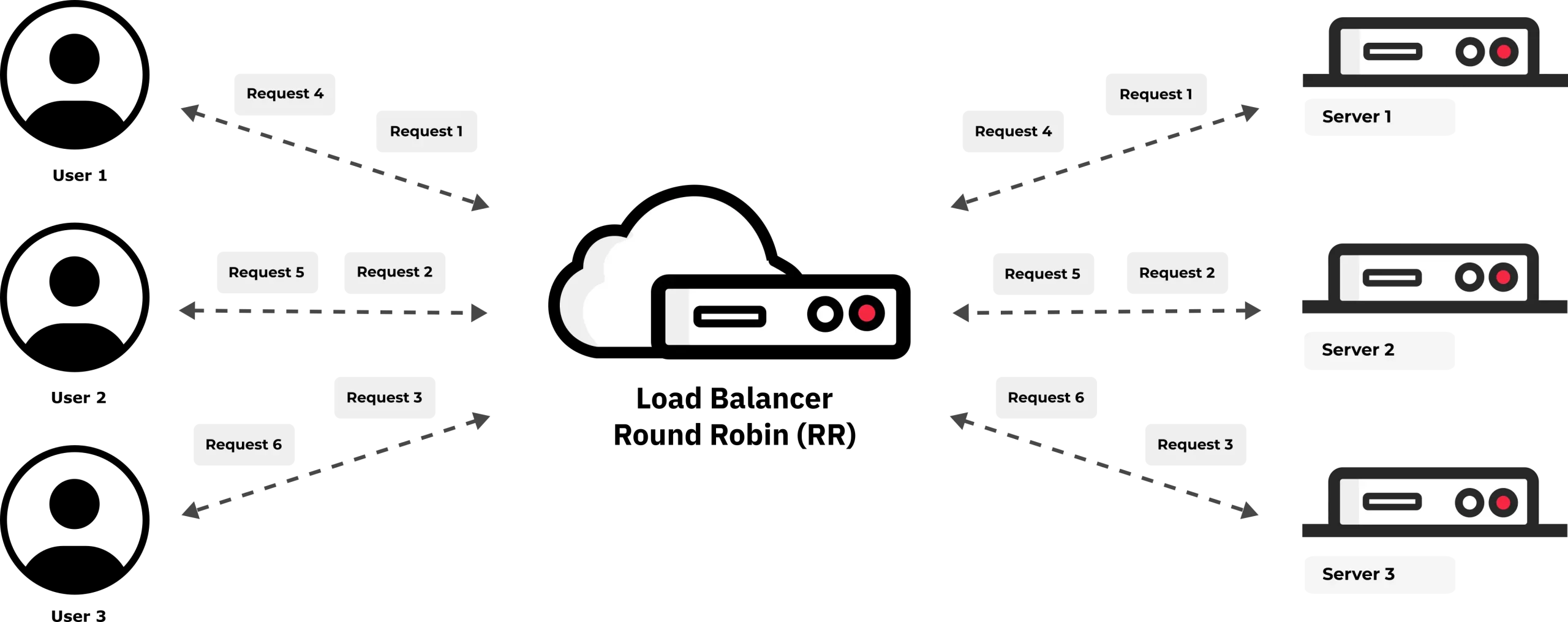

Round Robin (RR)

Round Robin is one of the most popular algorithms. User requests are distributed evenly across all servers in a rotating manner. The first request goes to the first server, the next to the second server, and so on until the last server, after which the cycle restarts.
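The rotation described above can be sketched in a few lines of Python. This is a minimal illustration, not a production balancer: the class name `RoundRobinBalancer` and the server names are hypothetical, and health checks are left out.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order, ignoring their load."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the list endlessly: a, b, c, a, b, c, ...
        self._rotation = cycle(list(servers))

    def next_server(self):
        # Each call advances the rotation by exactly one position.
        return next(self._rotation)


rr_balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
rr_picks = [rr_balancer.next_server() for _ in range(5)]
print(rr_picks)
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```

Note that the choice depends only on the position in the rotation, never on how busy a server is.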

Advantages:

- Simplicity: Easy to implement and suitable for environments where servers are similar and traffic load is constant.

- Uniform distribution: Ensures that no server is left without traffic.

Disadvantages:

- Does not take into account the current load on the servers, potentially leading to overload if server capacities differ.

Use Case: Environments where applications have consistent load and homogeneous servers, such as static content servers or applications that don’t require intensive processes.

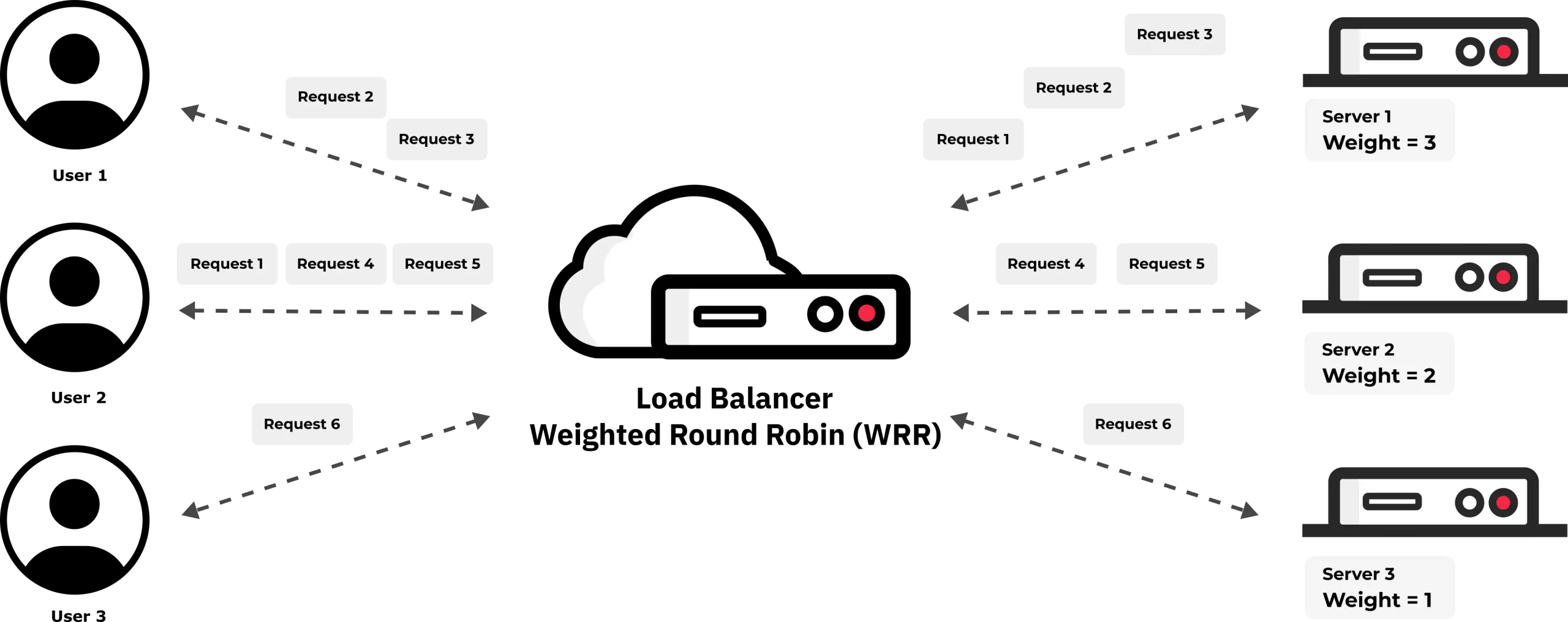

Weighted Round Robin (WRR)

This algorithm is a variation of Round Robin that assigns a “weight” to each server, so that some servers receive more traffic than others. The weight reflects the server’s capacity: for example, a server with a weight of 2 handles twice as many requests as a server with a weight of 1. Work is therefore distributed in proportion to each server’s capacity.
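One simple way to picture the weighting is to repeat each server in the rotation as many times as its weight. The sketch below assumes positive integer weights; the function name `weighted_rotation` and the server names are hypothetical.

```python
from itertools import cycle


def weighted_rotation(weights):
    """Build an endless rotation where each server appears `weight` times per cycle."""
    if not weights:
        raise ValueError("at least one server is required")
    expanded = []
    for server, weight in weights.items():
        if weight < 1:
            raise ValueError(f"weight for {server} must be a positive integer")
        expanded.extend([server] * weight)
    return cycle(expanded)


wrr_rotation = weighted_rotation({"big-server": 2, "small-server": 1})
wrr_picks = [next(wrr_rotation) for _ in range(6)]
print(wrr_picks)
# ['big-server', 'big-server', 'small-server',
#  'big-server', 'big-server', 'small-server']
```

This naive expansion sends a server's share as a consecutive burst; real balancers typically interleave the picks more smoothly, but the resulting proportions are the same.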

Advantages:

- Efficient distribution: Adjusts for servers with different capacities.

- Scalability: Ideal for environments with servers that have different resources.

Disadvantages:

- Still doesn’t consider the current load of servers, only their configured weight.

Use Case: Ideal for situations where servers have different capabilities (e.g., a pool that mixes more powerful machines with less powerful ones).

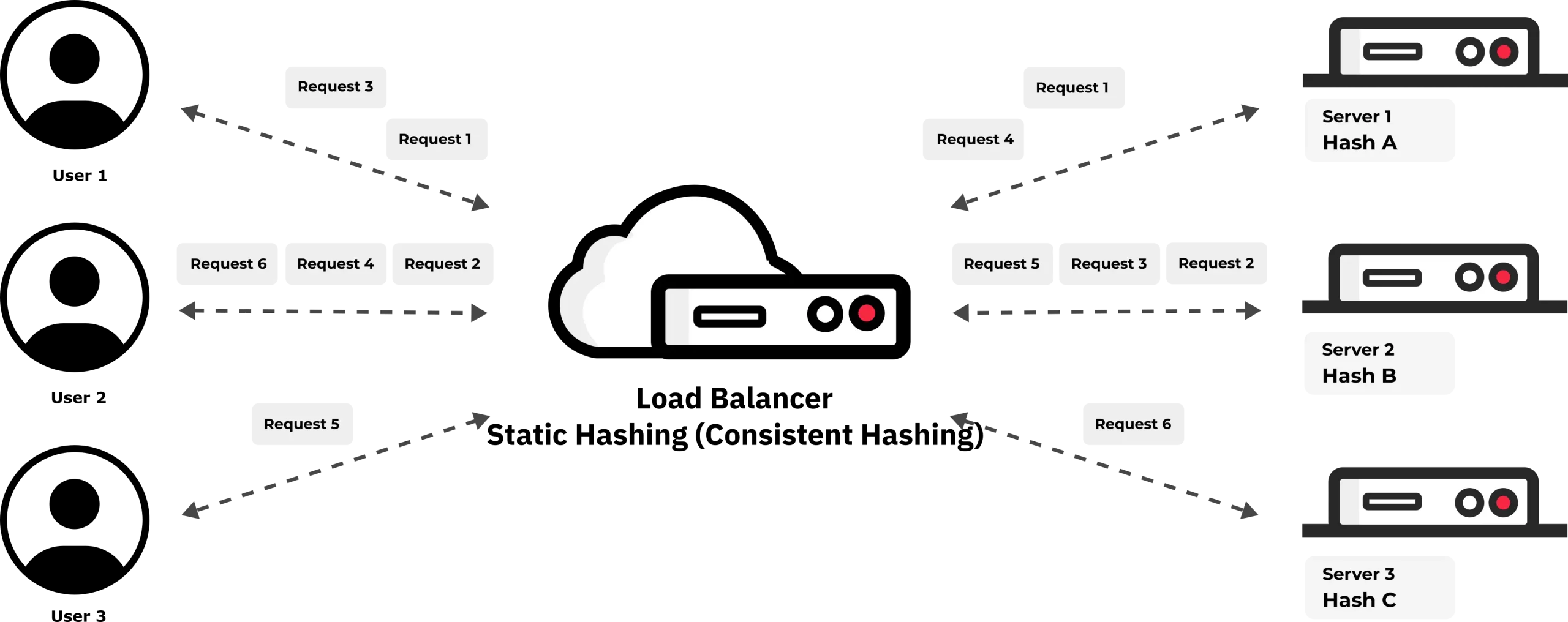

Static Hashing (Consistent Hashing)

In this algorithm, a hash function converts an attribute of the request (such as the user’s IP address or the requested URL) into a number, and that number determines which server receives the request. Because the same input always produces the same hash, repeated requests with the same key go to the same server for as long as the server pool does not change. This is useful for maintaining consistency, especially if session data needs to be remembered.
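A minimal sketch of hash-based selection, assuming the client IP address is used as the key. The function name `pick_server_by_hash` and the addresses are hypothetical; SHA-256 is used only because it gives a stable result across runs, unlike Python's built-in `hash()` for strings.

```python
import hashlib


def pick_server_by_hash(key, servers):
    """Map a request key (e.g. the client IP) to a server via hash modulo pool size."""
    if not servers:
        raise ValueError("at least one server is required")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and reduce it to a list index.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


hash_pool = ["server-a", "server-b", "server-c"]
first_pick = pick_server_by_hash("203.0.113.7", hash_pool)
second_pick = pick_server_by_hash("203.0.113.7", hash_pool)
print(first_pick == second_pick)  # True: same key, same pool, same server
```

With plain modulo hashing like this, adding or removing a server changes the mapping for most keys. Consistent hashing in the strict sense (for example, a hash ring) is a refinement that limits how many keys move when the pool changes.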

Advantages:

- Session persistence: Users are directed to the same server for as long as the server pool stays the same.

- Scalability: Ideal for distributed systems, such as CDNs.

Disadvantages:

- Uneven distribution: If the hash function or the chosen key spreads requests poorly (for example, many users sharing one IP address), some instances might become overloaded.

Use Case: Ideal for applications that need users to maintain their active session, such as e-commerce platforms.

Dynamic Algorithms: Adaptability and Real-Time Optimization

Dynamic algorithms adjust traffic distribution based on the current state of the servers, such as CPU load, memory usage, and response time. Though more complex to implement, they offer better optimization and adaptability.

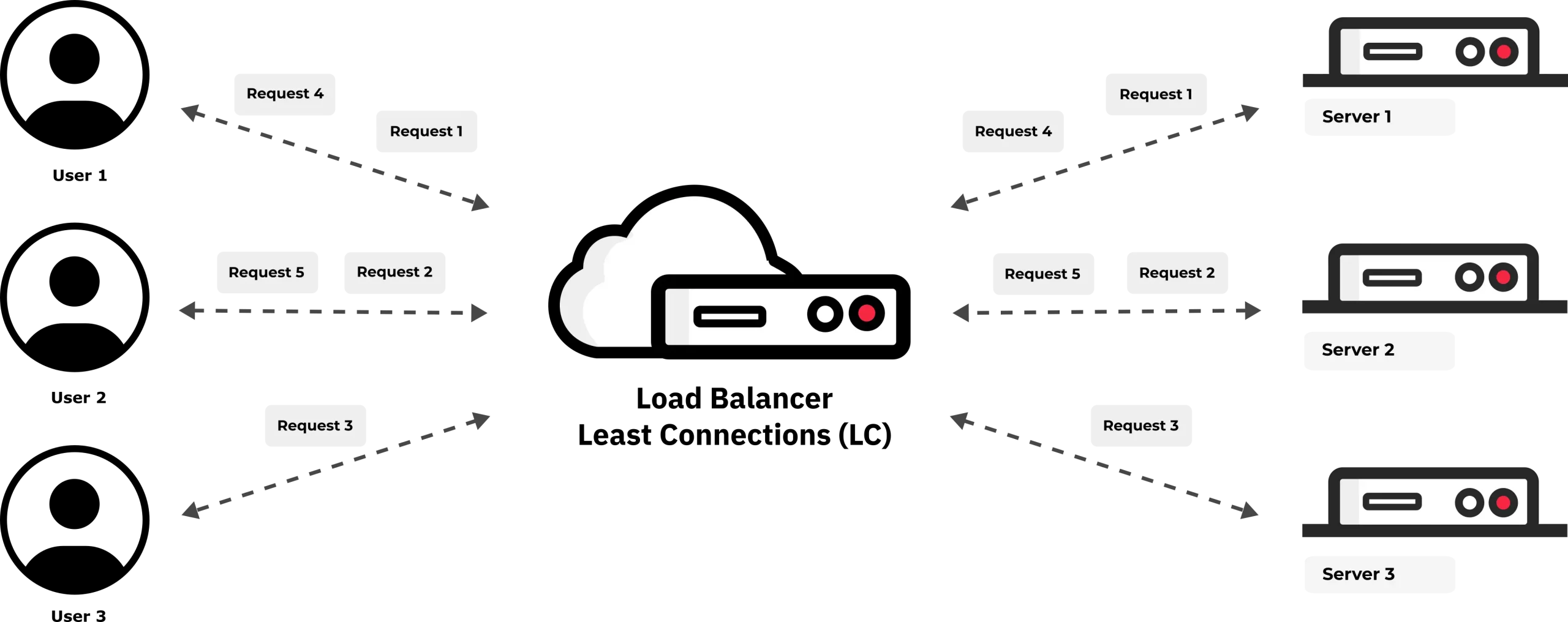

Least Connections (LC)

This algorithm directs each new request to the server with the fewest active connections at that moment. The load balancer continuously tracks how many connections each server holds, so less busy servers receive new requests first and no server accumulates a disproportionate share of connections.
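The bookkeeping behind this can be sketched as a per-server counter that goes up when a connection opens and down when it closes. The class name `LeastConnectionsBalancer` and its methods are hypothetical, and the sketch is single-threaded for clarity.

```python
class LeastConnectionsBalancer:
    """Tracks open connections per server and picks the least busy one."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server with the lowest count,
        # so ties are broken by the order the servers were listed in.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lc_balancer = LeastConnectionsBalancer(["server-a", "server-b"])
conn_1 = lc_balancer.acquire()  # server-a (both idle, first listed wins)
conn_2 = lc_balancer.acquire()  # server-b (server-a already has one)
lc_balancer.release(conn_1)     # server-a drops back to zero
conn_3 = lc_balancer.acquire()  # server-a again
print(conn_1, conn_2, conn_3)
# server-a server-b server-a
```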

Advantages:

- Even distribution: Ensures servers are not overwhelmed.

- Efficiency: Ideal for environments with many simultaneous connections.

Disadvantages:

- Doesn’t account for server capacity, only the number of connections.

Use Case: Useful for platforms that maintain persistent connections, such as live chat or streaming services.

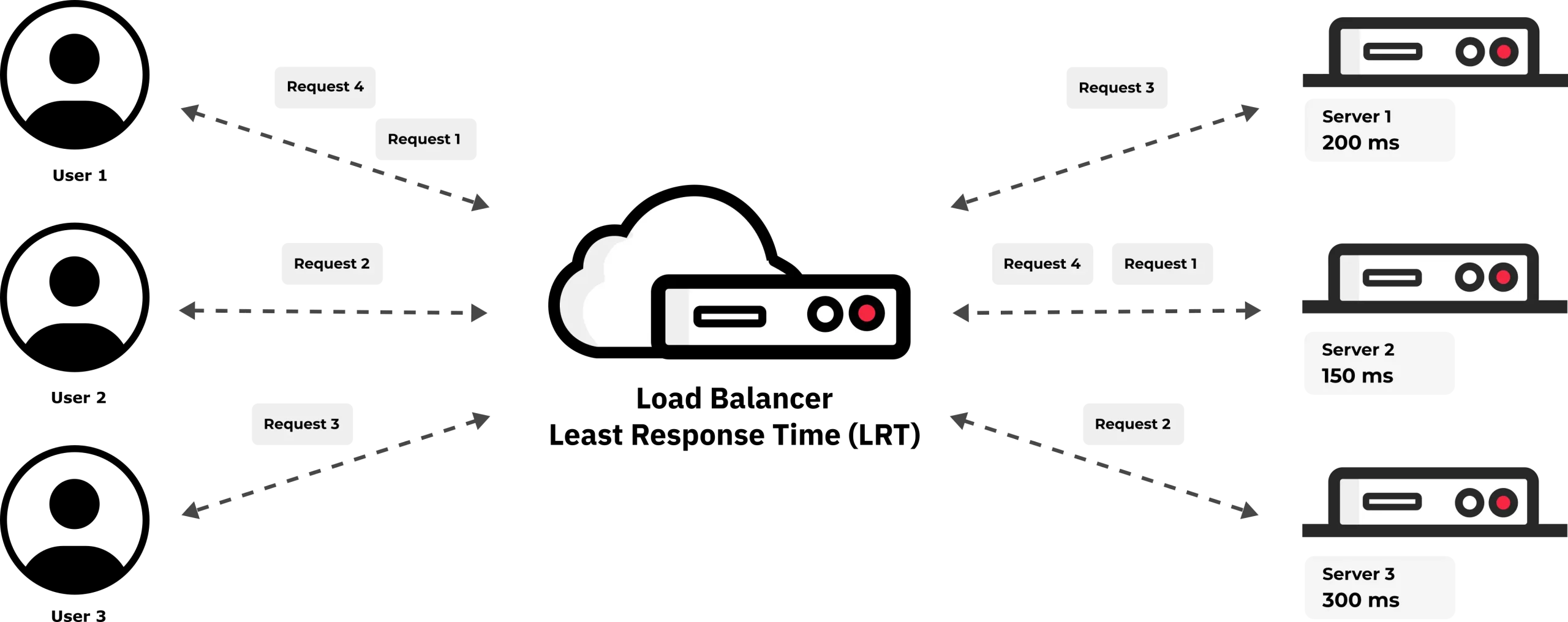

Least Response Time (LRT)

This algorithm is similar to Least Connections, but it also takes the server’s response time into account. The load balancer measures both the number of active connections and the time each server takes to respond, then selects the server that is, on average, responding the quickest. The goal is to optimize user experience by directing traffic to the server that can process requests the fastest.
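One possible way to combine the two measurements is to multiply a smoothed average response time by the number of pending connections and pick the lowest score. The scoring formula, the class name `LeastResponseTimeBalancer`, and the `smoothing` parameter are assumptions made for illustration; real load balancers each define their own formula.

```python
class LeastResponseTimeBalancer:
    """Picks the server with the lowest (average latency x pending work) score."""

    def __init__(self, servers, smoothing=0.2):
        if not servers:
            raise ValueError("at least one server is required")
        self.smoothing = smoothing                 # weight of the newest sample
        self.active = {s: 0 for s in servers}      # open connections per server
        self.avg_ms = {s: None for s in servers}   # smoothed response time

    def record(self, server, elapsed_ms):
        # Exponential moving average: recent samples count more than old ones.
        previous = self.avg_ms[server]
        if previous is None:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = (
                (1 - self.smoothing) * previous + self.smoothing * elapsed_ms
            )

    def _score(self, server):
        # Servers with no measurement yet score 0 and are therefore tried first.
        latency = self.avg_ms[server] or 0.0
        return latency * (self.active[server] + 1)

    def pick(self):
        return min(self.active, key=self._score)


lrt = LeastResponseTimeBalancer(["fast-server", "slow-server"])
lrt.record("fast-server", 20.0)
lrt.record("slow-server", 80.0)
lrt_first = lrt.pick()           # fast-server: score 20 vs 80
lrt.active["fast-server"] = 5    # simulate five open connections
lrt_second = lrt.pick()          # slow-server: score 120 vs 80
print(lrt_first, lrt_second)
# fast-server slow-server
```

The second pick shows why connection counts matter here: a fast server that is already busy can lose out to a slower but idle one.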

Advantages:

- Performance optimization: Chooses the fastest server to improve user experience.

- Efficiency: Ideal for applications sensitive to response time, like e-commerce.

Disadvantages:

- Doesn’t directly consider server resource usage (e.g., CPU, memory), only connections and response time.

Use Case: Platforms where response speed is critical, such as financial systems or real-time applications.

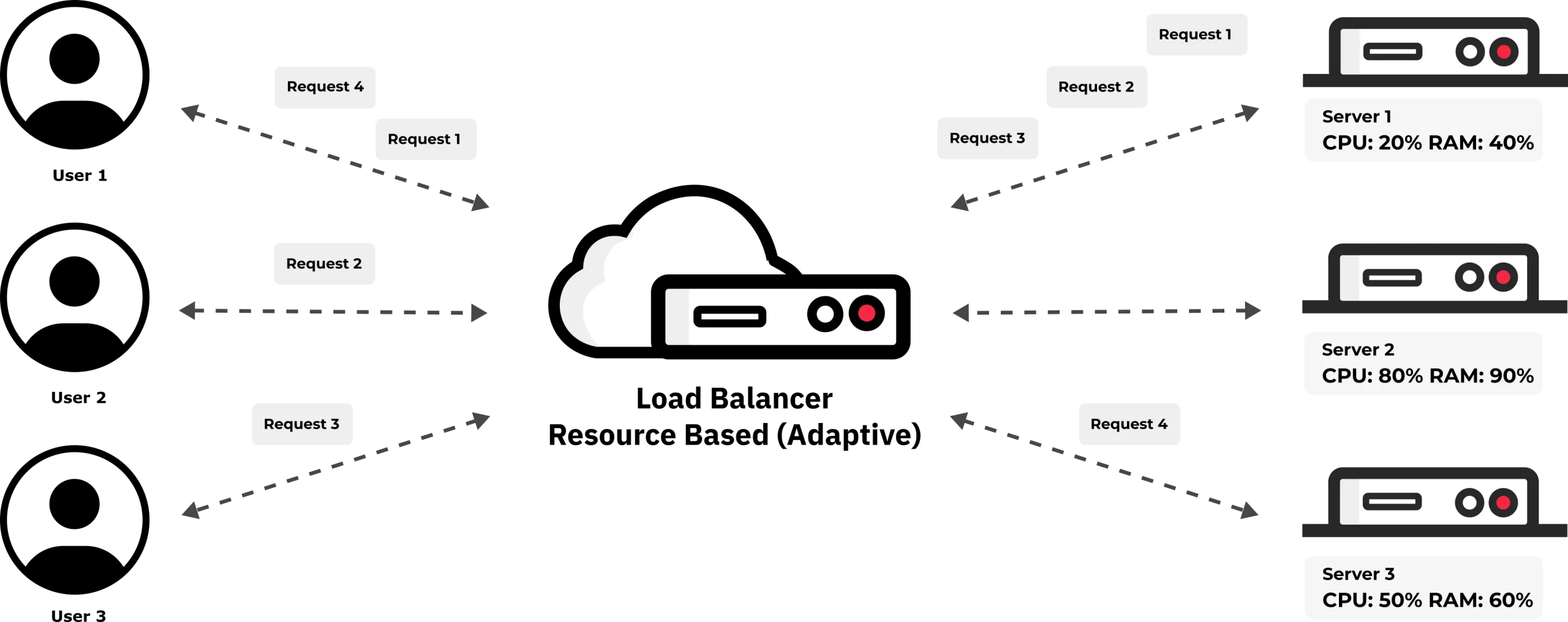

Resource-Based (Adaptive)

In a Resource-Based (Adaptive) algorithm, the load balancer uses real-time data about server resource usage (e.g., CPU, memory, I/O) to redirect traffic to servers with higher resource availability. This allows for better optimization of infrastructure performance.

This approach is akin to having sensors on each machine that constantly report its state. The load balancer uses these reports to direct requests to the servers that have enough spare resources to handle the load efficiently.
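As a rough sketch, each server could report its CPU, memory, and I/O usage as fractions, and the balancer could pick the server whose busiest resource still has the most headroom. The `ResourceReport` type, the `headroom` rule, and the sample figures are all hypothetical; how metrics are collected and weighted varies between products.

```python
from dataclasses import dataclass


@dataclass
class ResourceReport:
    """Latest usage figures from one server, each as a fraction from 0.0 to 1.0."""
    cpu: float
    memory: float
    io: float

    def headroom(self):
        # The busiest resource is treated as the bottleneck.
        return 1.0 - max(self.cpu, self.memory, self.io)


def pick_by_resources(reports):
    """Return the name of the server with the most spare capacity."""
    if not reports:
        raise ValueError("at least one report is required")
    return max(reports, key=lambda name: reports[name].headroom())


resource_reports = {
    "server-a": ResourceReport(cpu=0.90, memory=0.40, io=0.20),
    "server-b": ResourceReport(cpu=0.50, memory=0.60, io=0.30),
}
resource_pick = pick_by_resources(resource_reports)
print(resource_pick)
# server-b (its busiest resource is at 60%, versus 90% CPU on server-a)
```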

Advantages:

- Performance optimization: Directs traffic to servers with available resources, improving performance.

- Adaptability: Dynamically adjusts traffic distribution based on real-time conditions.

Disadvantages:

- Complexity: More complex to implement, since the load balancer must collect resource metrics from every server in real time.

Use Case: Ideal for cloud platforms where servers may have varying workloads. This approach is useful for applications that require variable resources over time, such as large databases or real-time analytics applications.

SKUDONET: Multi-Algorithm Load Balancing Strategy

At SKUDONET, we understand that each application and its operating environment present unique traffic and performance challenges. That’s why we’ve adopted a multi-algorithm load balancing strategy that combines both static and dynamic algorithms. This combination not only offers flexibility and efficiency but also addresses the shortcomings of individual algorithms, providing a robust solution tailored to each user’s needs.

Our implementation includes a variety of algorithms:

- Weight (Linear Weight-based Connection): A dynamic algorithm that distributes requests among servers in proportion to their capacity, allowing more powerful servers to handle a larger load.

- Source Hash (Source IP and Port Hash): A static algorithm that ensures consistency in directing requests from a specific user to the same server, ideal for session persistence.

- Simple Source Hash (Source IP Hash): A simplified static algorithm for applications that don’t require maintaining the port.

- Symmetric Hash (Return ID and Port Hash): A balanced distribution algorithm that ensures requests from the same client are directed to the same server.

- Round Robin (Sequential Selection): A static algorithm that ensures each server receives its fair share of requests, ideal for environments with similar server capabilities.

- Least Connections (Fewest Connections): A dynamic algorithm that directs new requests to the server with the fewest active connections, maximizing efficiency and real-time responsiveness.

Advantages of Combining Algorithms

By combining these algorithms, SKUDONET offers our users several significant benefits:

- Flexibility and Adaptability: By using both static and dynamic algorithms, we can adapt to different traffic patterns and load requirements, handling specific scenarios (e.g., sudden traffic spikes) more efficiently.

- Improved User Experience: Algorithms like Source Hash and Simple Source Hash ensure session consistency, resulting in a smoother user experience.

- Resource Optimization: Weight and Least Connections ensure servers are used optimally, preventing overload and ensuring requests are distributed based on each server’s actual capacity.

- Failure Recovery: In the event of a server failure, combinations of algorithms like Round Robin and Least Connections quickly redirect traffic to other available servers, maintaining service availability.

- Scalable Performance: With a varied implementation, we can scale based on the needs of different applications, ensuring optimal performance even in high-demand environments.

Choosing a load balancing infrastructure that integrates both static and dynamic algorithms not only enhances operational efficiency but also provides a superior experience for end users. At SKUDONET, we are committed to delivering robust and reliable performance, adapting to the changing needs of our users’ applications.